Quantum Computing: Hype or the Next Big Leap?

The Hype: Why People Are Buzzing About Quantum

Quantum computing promises to redefine what’s computationally possible. Instead of bits (0s and 1s), quantum computers use qubits, which can exist in a superposition of 0 and 1 at the same time. Entanglement goes further, linking qubits so that their measurement outcomes are correlated.
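To make superposition and entanglement a bit more concrete, here is a toy sketch in plain Python. It assumes the standard textbook model: a state is a list of complex amplitudes, and the probability of each measurement outcome is the squared magnitude of its amplitude. The helper names (`hadamard`, `apply_h_first`, `apply_cnot`, `probabilities`, `sample`) are hypothetical, written for illustration, and not part of any quantum SDK.

```python
import math
import random

# Toy state-vector model: a state is a list of complex amplitudes, and the
# probability of each measurement outcome is the squared magnitude.
S = 1 / math.sqrt(2)


def probabilities(state):
    """Measurement probability for each basis state."""
    return [abs(a) ** 2 for a in state]


def hadamard(state):
    """Hadamard gate on one qubit [amp of |0>, amp of |1>]: creates a superposition."""
    a0, a1 = state
    return [S * (a0 + a1), S * (a0 - a1)]


def apply_h_first(state):
    """Hadamard on the first qubit of a two-qubit state.

    Amplitudes are ordered |00>, |01>, |10>, |11>; the first qubit is the high bit.
    """
    new = [0j] * 4
    for q1 in (0, 1):
        a0, a1 = state[q1], state[2 + q1]  # first qubit = 0 vs. first qubit = 1
        new[q1] = S * (a0 + a1)
        new[2 + q1] = S * (a0 - a1)
    return new


def apply_cnot(state):
    """CNOT: flip the second qubit when the first is 1 (swaps |10> and |11>)."""
    return [state[0], state[1], state[3], state[2]]


def sample(state, shots, seed=0):
    """Simulate repeated measurements, returning outcome labels like '00'."""
    width = int(math.log2(len(state)))
    labels = [format(i, f"0{width}b") for i in range(len(state))]
    rng = random.Random(seed)
    return rng.choices(labels, weights=probabilities(state), k=shots)


# One qubit in equal superposition: 0 and 1 each have probability of about 0.5.
plus = hadamard([1 + 0j, 0j])
print(probabilities(plus))

# Two entangled qubits (a Bell state): only '00' and '11' ever appear.
bell = apply_cnot(apply_h_first([1 + 0j, 0j, 0j, 0j]))
print(sorted(set(sample(bell, shots=1000))))
```

Real hardware never exposes amplitudes like this. A classical simulation also needs 2^n amplitudes for n qubits, which is one reason simulating large quantum systems on classical machines gets hard so quickly.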

What it could revolutionize:

Cryptography: Breaking widely used public-key encryption, such as RSA, with Shor’s algorithm; classical computers would need thousands of years or more.

Drug discovery: Simulating molecules and proteins at the quantum level, more accurately than classical approximations allow.

Supply chain optimization: Potentially solving complex logistical problems far faster than classical methods.

AI acceleration: Potentially speeding up some machine-learning tasks through quantum parallelism.
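The cryptography threat comes from Shor’s algorithm, which reduces factoring a number N to finding the period r of f(x) = a^x mod N. Only the period finding needs a quantum computer; the rest is ordinary arithmetic. The sketch below, assuming a toy N of 15 and hypothetical helper names, brute-forces the period classically just to show the reduction. That brute-force loop is exactly the slow step a quantum computer would replace.

```python
import math


def find_period(a, n):
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).

    Brute force: this loop can take time exponential in the bit length of n.
    It is the step Shor's algorithm would hand to a quantum computer.
    """
    value, r = a % n, 1
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def shor_classical_part(a, n):
    """Try to split n (pick 1 < a < n) using the period of a**x mod n.

    Returns a pair of nontrivial factors, or None meaning "retry with another a".
    """
    g = math.gcd(a, n)
    if g != 1:
        return g, n // g  # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None  # odd period gives no usable square root
    y = pow(a, r // 2, n)  # y * y == 1 (mod n)
    if y == n - 1:
        return None  # y == -1 (mod n) only yields trivial factors
    # n divides (y - 1)(y + 1) but neither factor alone, so both gcds are nontrivial.
    return math.gcd(y - 1, n), math.gcd(y + 1, n)


print(find_period(7, 15))          # 4, since 7**4 = 2401 = 160 * 15 + 1
print(shor_classical_part(7, 15))  # (3, 5)
```

Real RSA moduli are typically 2,048 bits or more, far beyond what brute force, or today’s quantum hardware, can handle.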

Companies going all in:

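Google claimed quantum supremacy in 2019: its Sycamore processor finished a sampling task in about 200 seconds that Google estimated would take a classical supercomputer 10,000 years (IBM disputed that estimate).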

IBM, Microsoft, Intel, and startups like Rigetti and IonQ are investing big time.

Governments (especially the U.S. and China) are pouring billions into quantum R&D.

So yeah, the potential is enormous — if we can get it to scale.

The Reality Check: Why It Might Be Overhyped (For Now)

Quantum is not quite plug-and-play yet. It’s still early-stage science, not ready for mainstream deployment.

Key challenges:

Error rates: Qubits are extremely sensitive to noise and lose coherence (their fragile quantum state) quickly, so errors build up over a computation.

Hardware: Leading designs, such as superconducting qubits, must be cooled to near absolute zero. Not exactly desktop-ready.

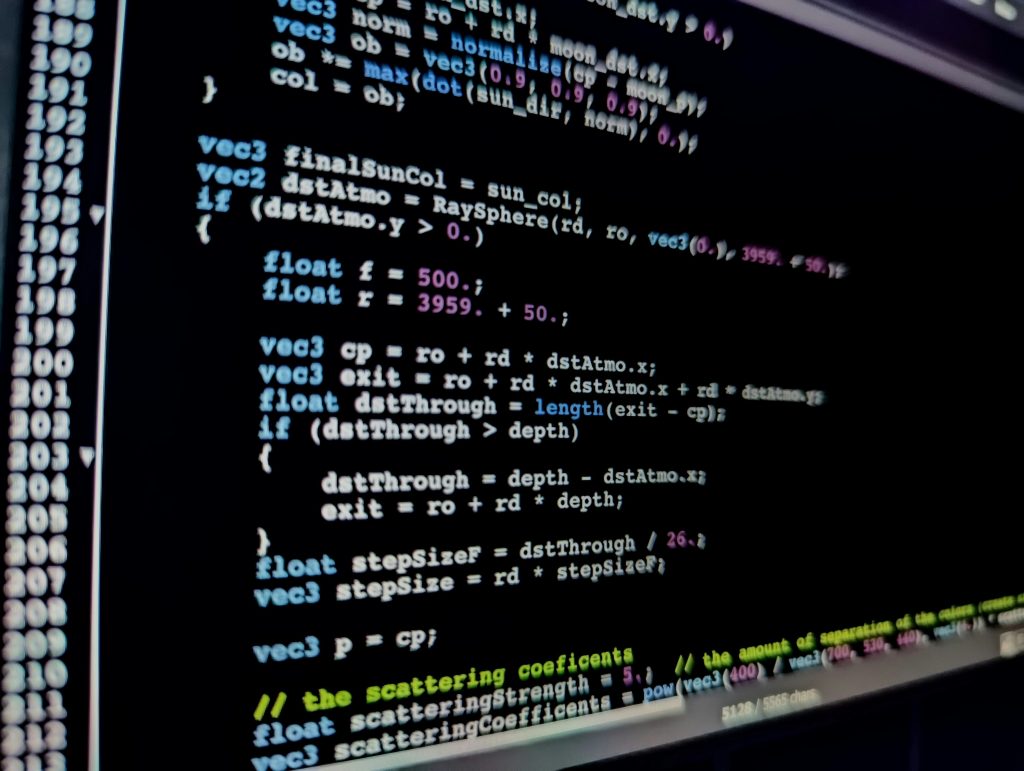

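Algorithms: The algorithms with the biggest known speedups, such as Shor’s for factoring, need far larger, error-corrected machines than exist today, so most remain theoretical.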

Practical use cases: Outside of niche simulations, classical computers still rule in most real-world tasks.
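A quick back-of-the-envelope calculation shows why error rates matter so much. Assuming, for simplicity, that every gate fails independently with the same probability p (real noise is messier), the chance that a circuit of n gates runs with no error at all is (1 - p)^n. The error rates and gate counts below are illustrative assumptions, not measurements of any particular machine, and `error_free_probability` is a hypothetical helper.

```python
def error_free_probability(error_rate, num_gates):
    """Chance that every one of num_gates gates succeeds, assuming each fails
    independently with probability error_rate (a deliberately simple model)."""
    return (1 - error_rate) ** num_gates


# Illustrative per-gate error rates (1% and 0.1%) and circuit sizes.
for p in (0.01, 0.001):
    for n in (100, 1_000, 10_000):
        print(f"error rate {p:.1%}, {n:>6} gates: {error_free_probability(p, n):.2e}")
```

At a 0.1% error rate, a 1,000-gate circuit runs error-free only about 37% of the time, and at 10,000 gates the odds collapse toward zero. That is why error correction, which spends many physical qubits to protect each logical qubit, is widely seen as a prerequisite for large-scale use.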

Bottom line:

We’re in the “vacuum tube” era of quantum computing — think early 1940s for classical computing.

True commercial advantage? Still 5–15 years away by many expert estimates.

So… Hype or Leap? It’s Both — Just Not Yet

TL;DR:

- Quantum computing is the next big leap in theory and in the lab.

- But in practice, we’re years away from broad, impactful applications.

- It’s like where AI was in the 1980s: promising, but not yet practical. Then one day… boom.