Federated Learning: Privacy-Preserving AI Training

As AI becomes more integrated into sensitive areas like healthcare, finance, and personal devices, data privacy has become a major concern.

Federated Learning offers a powerful solution: it allows AI models to learn from data without that data ever leaving your device.

What Is Federated Learning?

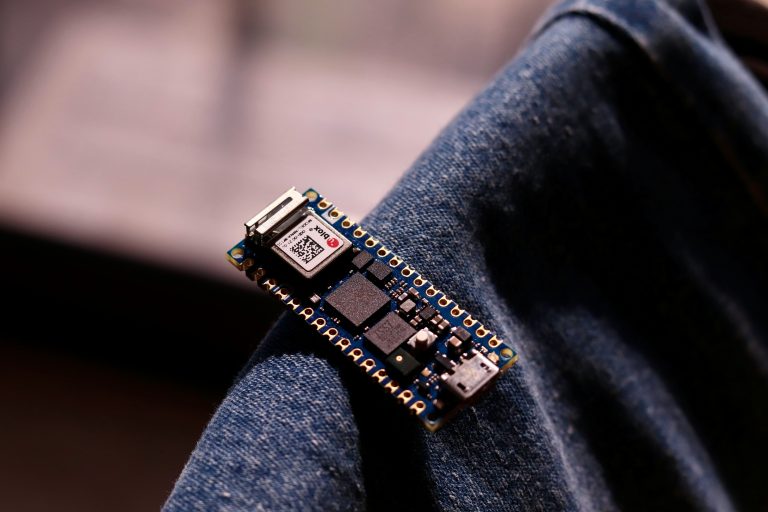

Federated Learning (FL) is a machine learning technique where a model is trained across many decentralized devices (such as smartphones or IoT devices) without sharing the actual data.

How It Works:

1. A global model is initialized and sent to individual devices.

2. Each device trains the model locally using its own data.

3. Instead of sending data to the server, devices send model updates (such as new weights or weight changes) back to the central server.

4. The server aggregates all the updates to improve the global model.

5. The updated global model is redistributed to devices, and the cycle repeats.

In short: Your phone learns from your personal data, but only the “lessons” (model improvements) are shared, not the data itself.
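The five steps above can be sketched as a small simulation. This is a minimal illustration rather than a production system: it assumes a linear model trained with plain gradient descent, three simulated "devices" with synthetic data, and a server that averages the returned weights in proportion to each device's sample count. The names `local_train`, `federated_round`, and `run_simulation` are hypothetical and chosen only for this example.

```python
import numpy as np

def local_train(weights, X, y, lr=0.1, epochs=5):
    """Step 2: a few gradient-descent steps on one device's private data."""
    w = weights.copy()
    for _ in range(epochs):
        grad = 2 * X.T @ (X @ w - y) / len(y)  # gradient of mean squared error
        w -= lr * grad
    return w

def federated_round(global_w, devices):
    """Steps 1-4 for one round: broadcast, train locally, aggregate."""
    # Each device starts from the current global weights and returns only
    # its updated weights; X and y are never handed to the server.
    local_ws = [local_train(global_w, X, y) for X, y in devices]
    sizes = np.array([len(y) for _, y in devices], dtype=float)
    # Server-side aggregation: average weighted by local sample count.
    return np.average(local_ws, axis=0, weights=sizes)

def run_simulation(rounds=50, seed=0):
    """Step 5: repeat the round many times on synthetic data."""
    rng = np.random.default_rng(seed)
    true_w = np.array([2.0, -1.0])  # assumed ground truth for the toy data
    devices = []
    for n in (20, 50, 80):  # three devices with different amounts of data
        X = rng.normal(size=(n, 2))
        y = X @ true_w + 0.01 * rng.normal(size=n)
        devices.append((X, y))
    global_w = np.zeros(2)
    for _ in range(rounds):
        global_w = federated_round(global_w, devices)
    return global_w, true_w

if __name__ == "__main__":
    learned, target = run_simulation()
    print("learned weights:", learned)
    print("target weights: ", target)
```

In a real deployment the devices would be separate machines and the weights would travel over a network, but the division of labor is the same: training touches the data, the server touches only the weights.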

Why Is Federated Learning Important?

Data Privacy and Security

1. Raw data never leaves your device.

2. Because the raw data is never collected in one central place, the risk of data breaches, leaks, and misuse is reduced.

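Compliance with Regulations

Because raw data stays where it was collected, federated learning can make it easier to comply with strict privacy laws like GDPR (Europe) and HIPAA (US healthcare), although it does not guarantee compliance by itself.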

Reduced Bandwidth Usage

Instead of uploading heavy datasets, devices transmit only model updates, which are usually much smaller than the raw data, saving network resources.

Personalization

Models can be adapted to the unique data of each user, offering more personalized AI experiences without compromising privacy.

Key Technologies and Concepts Behind Federated Learning

1. Secure Aggregation: Cryptographic techniques let the server compute the combined result of many devices' updates without being able to read any individual update, protecting individual contributions.

2. Differential Privacy: Model updates are typically clipped and have calibrated “noise” added, so that even if updates are intercepted, it’s hard to trace them back to a single user’s data.

3. Federated Averaging (FedAvg): A basic algorithm in which each participating device trains locally and the server averages the resulting models, usually weighted by how much data each device holds, to update the global model.

4. Edge Computing: Since training happens locally, Edge AI technologies are often integrated with federated learning systems.
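Two of the ideas above, differential privacy and secure aggregation, can be illustrated with a toy sketch. It makes several assumptions: `clip_and_add_noise` shows only the clip-then-add-Gaussian-noise mechanics and does not calibrate `noise_std` to any formal privacy budget, and `pairwise_masks` imitates the cancelling-mask idea behind secure aggregation using ordinary random numbers instead of real cryptography, with no handling of devices that drop out. Both function names are hypothetical.

```python
import numpy as np

def clip_and_add_noise(update, clip_norm, noise_std, rng):
    """Bound the update's L2 norm, then add Gaussian noise to it."""
    norm = np.linalg.norm(update)
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return update * scale + rng.normal(0.0, noise_std, size=update.shape)

def pairwise_masks(num_clients, dim, rng):
    """Toy masks: for each pair (i, j), client i adds a random vector
    and client j subtracts the same vector, so all masks sum to zero."""
    masks = np.zeros((num_clients, dim))
    for i in range(num_clients):
        for j in range(i + 1, num_clients):
            # Assumption: a real protocol would derive this vector from a
            # secret shared by clients i and j, not from a common generator.
            shared = rng.normal(size=dim)
            masks[i] += shared
            masks[j] -= shared
    return masks

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    updates = rng.normal(size=(4, 3))       # four clients, three parameters
    private = np.stack(
        [clip_and_add_noise(u, clip_norm=1.0, noise_std=0.1, rng=rng)
         for u in updates]
    )
    masked = private + pairwise_masks(4, 3, rng)
    # The server sees only `masked`; each row looks random on its own,
    # yet the masks cancel when the rows are summed.
    print(np.allclose(masked.sum(axis=0), private.sum(axis=0)))
```

The point of the masking step is that the server can still compute the sum it needs for averaging, while any single masked row tells it little about that client's update.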

Challenges in Federated Learning

While powerful, federated learning faces several hurdles:

1. Device Heterogeneity: Different devices have different computing power, memory, and connectivity, making coordination complex.

2. Communication Costs: Sending model updates can still be costly at large scale, especially over mobile networks.

3. System Reliability: Devices may drop out of training (for example, because of a dead battery or a lost connection), leading to incomplete updates.

4. Privacy Risks: Even model updates can sometimes leak information about the training data if not carefully protected (for example, through “model inversion” attacks that try to reconstruct that data).

Ongoing research is focused on making federated learning more robust, secure, and efficient.
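As one small illustration of the reliability problem, a server can aggregate only the updates that actually arrive and skip the round if too few devices report. The sketch below is an assumption about how such a policy might look, not a description of any particular framework: `aggregate_reported` and its `min_reports` threshold are hypothetical, and a dropped device is represented simply as `None`.

```python
import numpy as np

def aggregate_reported(updates, sample_counts, min_reports=2):
    """Weighted-average the updates that arrived; None marks a dropout.

    Returns None when fewer than `min_reports` devices reported, which the
    caller can treat as "keep the previous global model for this round".
    """
    reported = [(u, n) for u, n in zip(updates, sample_counts) if u is not None]
    if len(reported) < min_reports:
        return None
    stacked = np.stack([u for u, _ in reported])
    weights = np.array([n for _, n in reported], dtype=float)
    return np.average(stacked, axis=0, weights=weights)

if __name__ == "__main__":
    # The second device dropped out, so its sample count is ignored.
    updates = [np.array([1.0, 1.0]), None, np.array([3.0, 3.0])]
    print(aggregate_reported(updates, sample_counts=[10, 99, 30]))
```

Renormalizing the weights over the devices that reported keeps the average well defined, at the cost of a round that reflects only part of the population.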

Future of Federated Learning

1. Cross-Silo Federated Learning: Used between a small number of organizations (e.g., hospitals, banks) that each hold a large amount of data.

2. Cross-Device Federated Learning: Used across millions of devices (e.g., smartphones).

3. Federated Learning + Blockchain: Emerging research explores combining decentralized learning with blockchain for added security and transparency.

4. Personalized Federated Learning: Developing models that are even more customized to each user while still benefiting from global training.
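One simple way to picture personalization is local fine-tuning: a device takes the shared global model and runs a few extra training steps on its own data. The sketch below assumes a linear model and mean squared error; `personalize` and `mse` are hypothetical helper names, the data is synthetic, and research on personalized federated learning covers many approaches beyond this one.

```python
import numpy as np

def mse(w, X, y):
    """Mean squared error of a linear model with weights w."""
    return float(np.mean((X @ w - y) ** 2))

def personalize(global_w, X, y, lr=0.05, steps=20):
    """Fine-tune a copy of the global weights on one user's local data."""
    w = global_w.copy()
    for _ in range(steps):
        w -= lr * 2 * X.T @ (X @ w - y) / len(y)  # gradient step on MSE
    return w

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    global_w = np.array([2.0, -1.0])      # assumed output of global training
    X = rng.normal(size=(30, 2))
    y = X @ np.array([2.5, -0.5])         # this user differs from the average
    local_w = personalize(global_w, X, y)
    print("global model error:      ", mse(global_w, X, y))
    print("personalized model error:", mse(local_w, X, y))
```

The personalized weights stay on the device, so the user gets a better fit without sending any additional information to the server.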

Conclusion

Federated Learning offers a promising path to building powerful AI systems without sacrificing user privacy.

By keeping data on-device and focusing on sharing knowledge rather than raw information, it reimagines how machine learning can work in a privacy-conscious world.